Today, the ACLU of Massachusetts published a repository of over 1,400 documents which paint a picture of how government agencies across Massachusetts have been using facial recognition technology in recent years. The repository catalogs the breadth of contexts in which the Massachusetts government has flirted with facial recognition, a dystopian technology that is dangerously inaccurate when used on faces with darker skin, feminine features, younger features, or older features.

These documents are the result of over 400 individual public records requests filed predominantly between 2018 and 2020. They chronicle facial recognition surveillance technologies being marketed to and used in state agencies, municipal police departments and schools, and they include hundreds of solicitations from tech companies aggressively marketing their products to a wide range of government agencies.

Here we present highlights from this document repository, namely:

- The widespread and unregulated use of facial recognition scans run by the Massachusetts Registry of Motor Vehicles, on behalf of law enforcement agencies across the country

- An aggressive and, in some cases, successful marketing campaign aimed at law enforcement by dystopian surveillance startup Clearview AI

- Multiple instances of smaller companies partnering with government agencies to deploy dangerous surveillance technologies without public knowledge

Great Power Without Responsibility: Facial Recognition at the RMV

The largest and most comprehensive state or local government facial recognition program in Massachusetts is managed by the Registry of Motor Vehicles (RMV). This system was put in place in 2006 with the original goal of investigating fraud in the licensing process. Almost immediately after obtaining its facial recognition system fifteen years ago, the RMV told policing entities throughout the state that they could send photos of unknown persons to be searched against its statewide license and identification photo database.

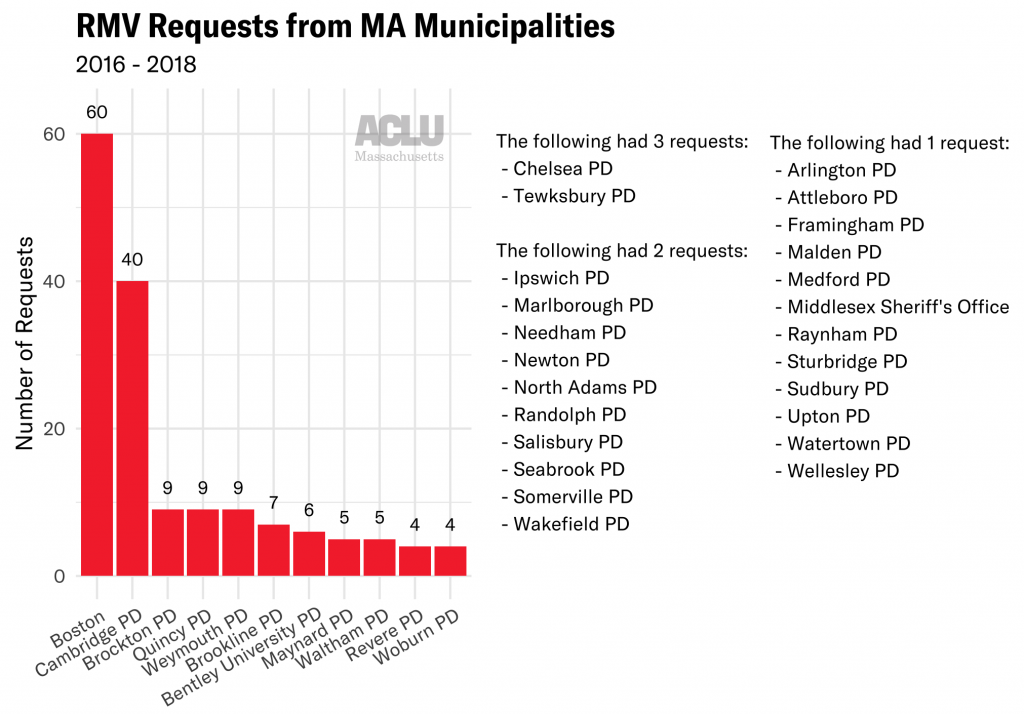

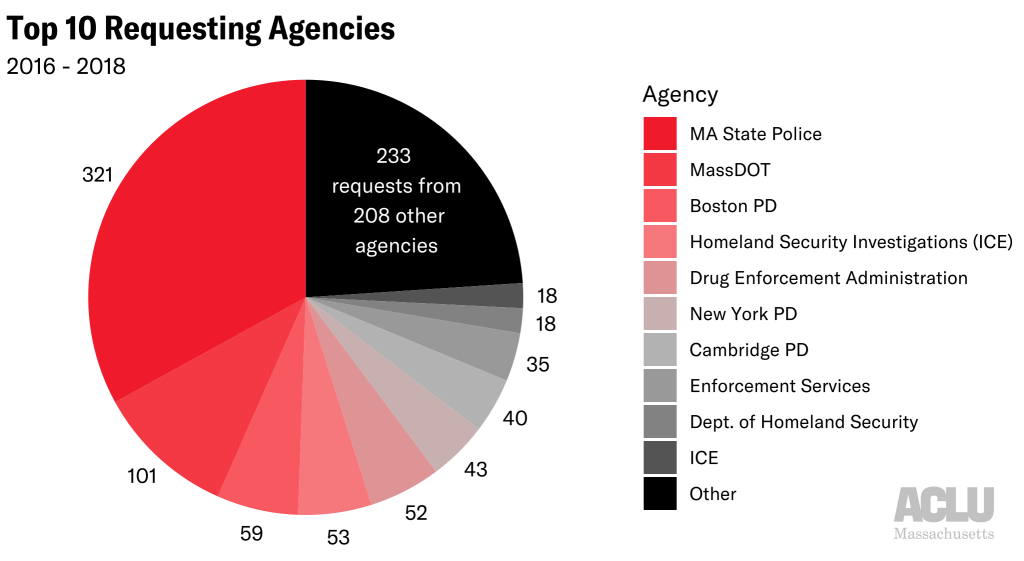

Documents obtained by the ACLU of Massachusetts through a public records lawsuit show that the RMV ran hundreds of scans for police each year, and not just for Massachusetts entities. ICE, the DEA, the Department of Homeland Security, and the NYPD all requested scans between 2016 and 2018.

The records show the RMV performed these searches absent any state law or regulations to govern their use, and without any judicial oversight or internal mechanisms to prevent abuse. To request a scan, law enforcement and immigration authorities simply sent emails claiming to be from law enforcement; no verification was required. Searches were recorded only in handwritten logs, apparently in red pencil, and with lax reporting standards: over 70 scans were run in 2016 and 2017 on behalf of an employee listed simply as “Karen.” Multiple emails describe scans that were run using photos taken from personal Facebook profiles. According to the records, the RMV did not decline a single search request from any law enforcement official during the period covered by the records request.

During that period, the RMV facial recognition system was used by a wide range of parties. In Massachusetts, while the Boston and Cambridge Police Departments each submitted dozens of requests, over 30 other departments submitted one or more.

Many out-of-state agencies successfully requested searches, including the South Carolina DMV, the New Hampshire State Police, Rhode Island Police, and the NYPD. Federal agencies took advantage, too: requests appear in the logs from U.S. Customs and Border Protection, the Department of Homeland Security, ICE, the Secret Service, and the IRS.

What was the reasoning behind these scans? Were they tied to violent crime investigations, petty theft, immigration enforcement, or just an officer curious to dig up dirt on an ex? Did the RMV take steps to mitigate the known racial bias of the software? The RMV’s handwritten logs provide no context. Given that the RMV facial recognition system contains a photo of every individual with a Massachusetts driver’s license or state identification card, these regulatory failures are particularly irresponsible and dangerous.

Dystopia, Get Your Dystopia Here: Clearview’s Marketing Tactics

In 2019, national news stories broke about a terrifying new technology company that links facial recognition results to other content on the internet. Given a single facial image, Clearview AI claims it can find photos of that individual posted to Facebook, Twitter, Flickr, YouTube, and more. Clearview pulls off this freaky feat by scraping billions of images from social media sites, despite being told to cease and desist by multiple companies.

A leak of Clearview’s data in early 2020 revealed widespread use of Clearview by over 2,000 police departments nationally, as well as numerous foreign governments and private companies. Concerned about its adoption in Massachusetts, the ACLU of Massachusetts filed public records requests with every police department in the Commonwealth – 346 in total – to obtain emails mentioning Clearview.

These emails show the company successfully gaining a foothold among Massachusetts law enforcement. At least eight law enforcement agencies statewide accepted Clearview’s invitation to start a free trial of its software: Agawam PD, Marlborough PD, Somerset PD, Wellesley PD, Yarmouth PD, Falmouth PD, Norfolk County Sheriff’s Office, and Oxford PD. In Somerset, the police department initially denied in writing that it had used Clearview, until leaked data revealed that one detective had indeed been using a free trial, possibly without the knowledge of department superiors, let alone residents or local elected officials. The detective even used Clearview to run a scan on behalf of an NYPD officer. Such stories demonstrate that in the absence of community control of police surveillance, police and private companies often work together in the shadows to dangerously expand police surveillance tactics.

Records also show an aggressive marketing scheme aimed at police departments that ultimately didn’t bite: at least 36 Massachusetts police departments received solicitations from Clearview – and probably many more, given that not all records requests were answered. These solicitations were sometimes sent directly from Clearview, but more frequently were sent by publications such as American Police Beat Magazine, Officer.com, or Police Magazine, an indicator of the chilling extent to which facial recognition is marketed to the police. Clearview’s own marketing encourages its users to “take a selfie” or run a search on a celebrity’s photo – behavior that would most likely be barred if the use of the technology were subjected to any sensible rules, regulations, or laws.

Here, There, Everywhere: Facial Recognition Throughout Government

Unfortunately, Clearview and the RMV are just the tip of the iceberg when it comes to government use of facial recognition in Massachusetts. Records requests filed with government offices in over 40 Massachusetts cities and towns turned up multiple cases of small companies peddling dangerous technologies for government use.

In the Town of Plymouth, for example, emails from 2018 reveal extensive communication between the chief of police and Jacob Sniff, the young founder of a billionaire-backed facial recognition startup called Suspect Technologies. At one point during discussion about the Plymouth PD adopting his software, Sniff admits that his product might work just “30% of the time” on poorer-quality images such as those taken from surveillance camera footage. Showing a baffling degree of hubris, Sniff also asks the police department to help him get access to the RMV database – a government system containing personally identifiable information for over 5 million Massachusetts residents. After the ACLU disclosed these emails to the press, the Plymouth Police Department said it would not contract with the company. But these emails show how profit-hungry companies are quietly pressuring even small police departments to buy into unreliable dystopian technologies, and that absent strong law to protect people’s rights and the public interest, police are all too often eager to sign up.

In Revere, we learned that the public school system uses a visitor management software called LobbyGuard that claims to “screen and track everyone who enters your schools.” Though the school district asserts that LobbyGuard does not use facial recognition, the use of such a technology raises concerning questions about privacy and surveillance in school settings, the transfer of sensitive data to little-known corporations, and the role of private companies in expanding government surveillance.

And in the City of Boston, a looming software update from a video analytics company called Briefcam threatened to supercharge all of the region’s networked surveillance cameras with face surveillance capabilities at the click of a button. Documents from 2019 show that the City upgraded its Briefcam video analytics software to version 4.3. Just a year earlier, Briefcam had launched a new facial recognition feature – in version 5.3. All the City had to do was install a single software update, and the faces of hundreds of thousands of Boston residents and visitors would have begun to be systematically cataloged. Thankfully, a facial recognition ban, backed by the ACLU, the Student Immigrant Movement, and many other organizations and passed unanimously by the Boston City Council in June 2020, makes any such software update illegal in the City of Boston.

What’s perhaps most frightening about these documents is the pattern they suggest: it’s clear that this predatory and biased technology has found fertile ground to take root among Massachusetts government agencies. At least ten municipal police departments have embraced facial recognition technologies provided by unregulated third-party private vendors. And if officers aren’t wary of starting a free trial with Clearview, and police chiefs feel comfortable negotiating contracts with fledgling companies like Suspect Technologies, where would they draw the line?

Without law preventing it, more software trials will be started, more contracts will be signed, and more Massachusetts residents will be endangered. We don’t have to speculate – multiple cases of Black men being wrongfully arrested on the basis of faulty facial recognition scans warn of how this story might end.

These documents further underscore the importance of a newly proposed bill that would impose strict limitations on the use of face surveillance by Massachusetts government agencies. While the state police reform bill that was passed in December 2020 includes some regulations on police use of facial recognition, the newly proposed bill goes much further, prohibiting the vast majority of government agencies from obtaining or possessing the technology and thus protecting Massachusetts residents from aggressive marketing campaigns waged by companies like Clearview and Suspect Technologies. The bill would also impose a higher standard on police use of facial recognition: requiring a warrant, limiting the technology’s use to serious investigations, establishing key due process protections, and creating a supervised, centralized process for all facial recognition searches.

We must enact strong and unambiguous law to govern facial recognition technology – because as these documents show, we cannot trust law enforcement and private companies to regulate themselves.

If you’re interested in learning more about how facial recognition might affect your community, we invite you to visit the Press Pause on Face Surveillance homepage and to explore the document repository on the ACLU of Massachusetts’ Data for Justice site:

Explore the Facial Recognition Document Repository