Data show COVID-19 is hitting essential workers and people of color hardest

Newly released data from the Boston Public Health Commission (BPHC) show that COVID-19 is present at higher rates in certain Boston communities, including Hyde Park, Mattapan, Dorchester, and East Boston. Analysis presented here by the ACLU of Massachusetts compares BPHC's findings to census data, in order to better understand how the COVID-19 pandemic is affecting Boston’s essential workers and communities of color.

COVID-19 Cases Concentrated Among Boston's Essential Workers

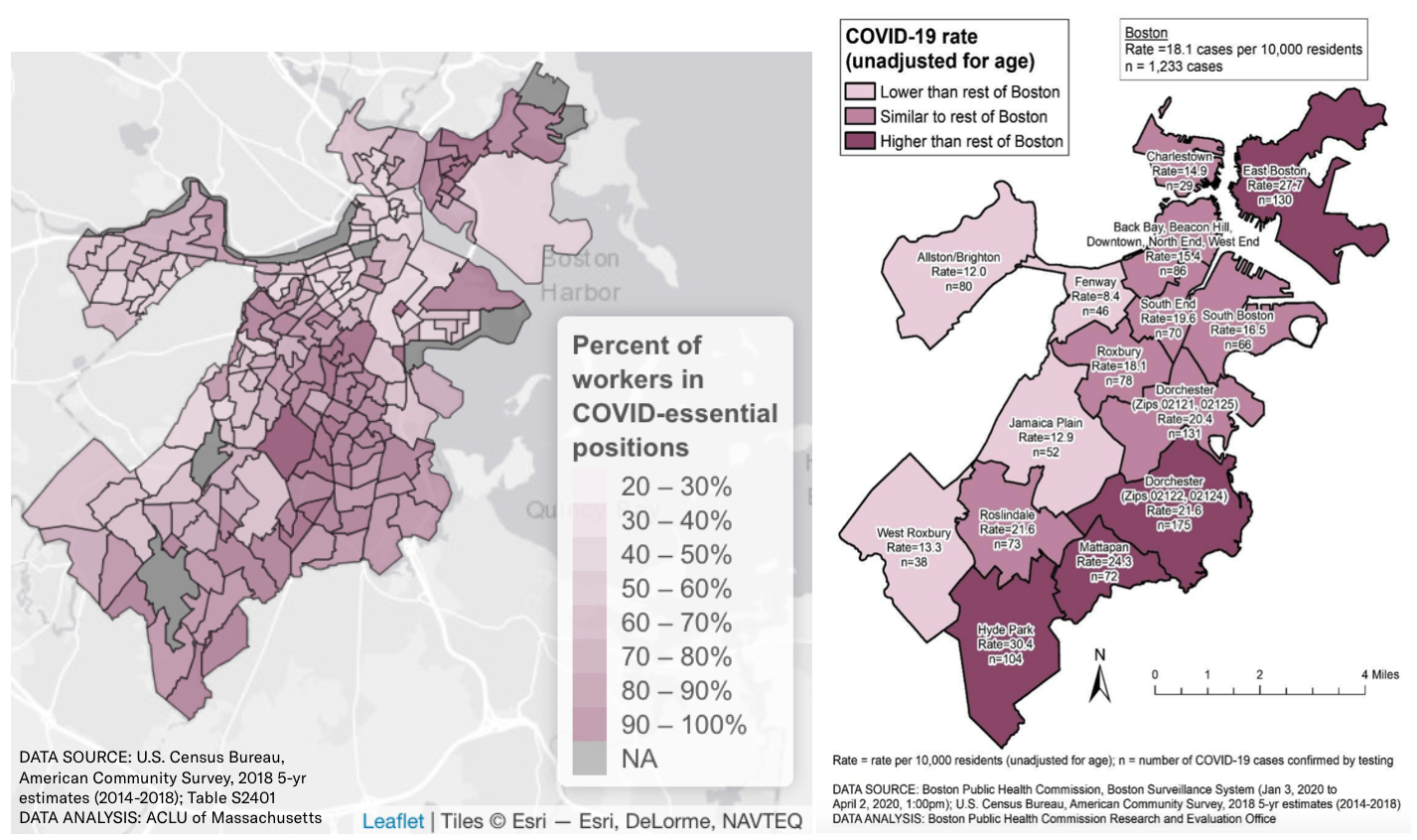

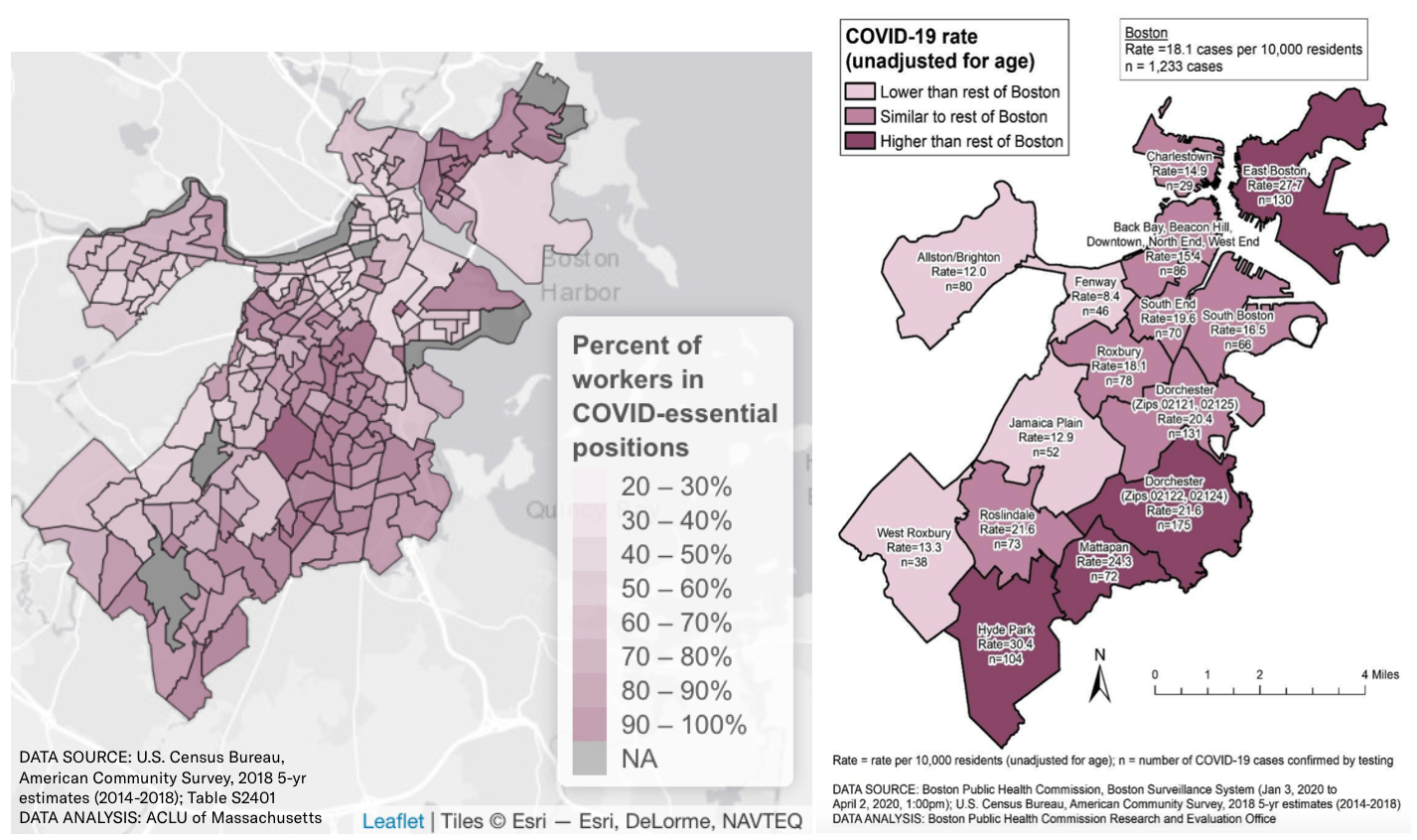

According to census data, the Boston neighborhoods most affected by COVID-19 are also those with the highest proportions of essential workers in the city.

Using data from the U.S. Census 2018 American Community Survey (ACS), we mapped the proportion of workers across Boston who are employed in "COVID-essential" occupations. We defined "essential" occupations to include the following:

- Healthcare practitioners and technical occupations

- Construction and extraction occupations

- Farming, fishing, and forestry occupations

- Installation, maintenance, and repair occupations

- Material moving occupations

- Production occupations

- Transportation occupations

- Office and administrative support occupations

- Sales and related occupations

- Building and grounds cleaning and maintenance occupations

- Food preparation and serving related occupations

- Healthcare support occupations

- Personal care and service occupations

- Protective service occupations
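As a rough illustration of how such a proportion can be computed, the sketch below sums the workers in the occupation groups listed above and divides by all employed workers in a tract. It is written in Python rather than the R used for the maps, and it is not the analysis code behind this post. The occupation labels are shortened stand-ins for the ACS categories, the function name `essential_share` is hypothetical, and the counts are made-up numbers for an imaginary tract, not census data.

```python
# Shortened labels for the occupation groups listed above. These are
# assumed names for illustration, not actual ACS column identifiers.
ESSENTIAL_OCCUPATIONS = {
    "healthcare_practitioners_technical",
    "construction_extraction",
    "farming_fishing_forestry",
    "installation_maintenance_repair",
    "material_moving",
    "production",
    "transportation",
    "office_administrative_support",
    "sales_related",
    "building_grounds_cleaning_maintenance",
    "food_preparation_serving",
    "healthcare_support",
    "personal_care_service",
    "protective_service",
}


def essential_share(workers_by_occupation):
    """Return the fraction of employed workers in essential occupations."""
    total = sum(workers_by_occupation.values())
    if total == 0:
        raise ValueError("tract has no employed workers")
    essential = sum(
        count
        for occupation, count in workers_by_occupation.items()
        if occupation in ESSENTIAL_OCCUPATIONS
    )
    return essential / total


# Made-up counts for one hypothetical tract (NOT census data).
example_tract = {
    "healthcare_support": 120,
    "food_preparation_serving": 200,
    "sales_related": 180,
    "management": 150,  # not in the essential list
    "legal": 50,        # not in the essential list
    "education": 100,   # not in the essential list
}

share = essential_share(example_tract)
print(f"Essential workers: {share:.1%} of employed residents")
```

Repeating this calculation for every census tract gives the per-tract proportions that a map of this kind would shade.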

COVID-essential workers are most concentrated in Dorchester, Roxbury, and East Boston – the same neighborhoods where the virus is present at its highest rates.

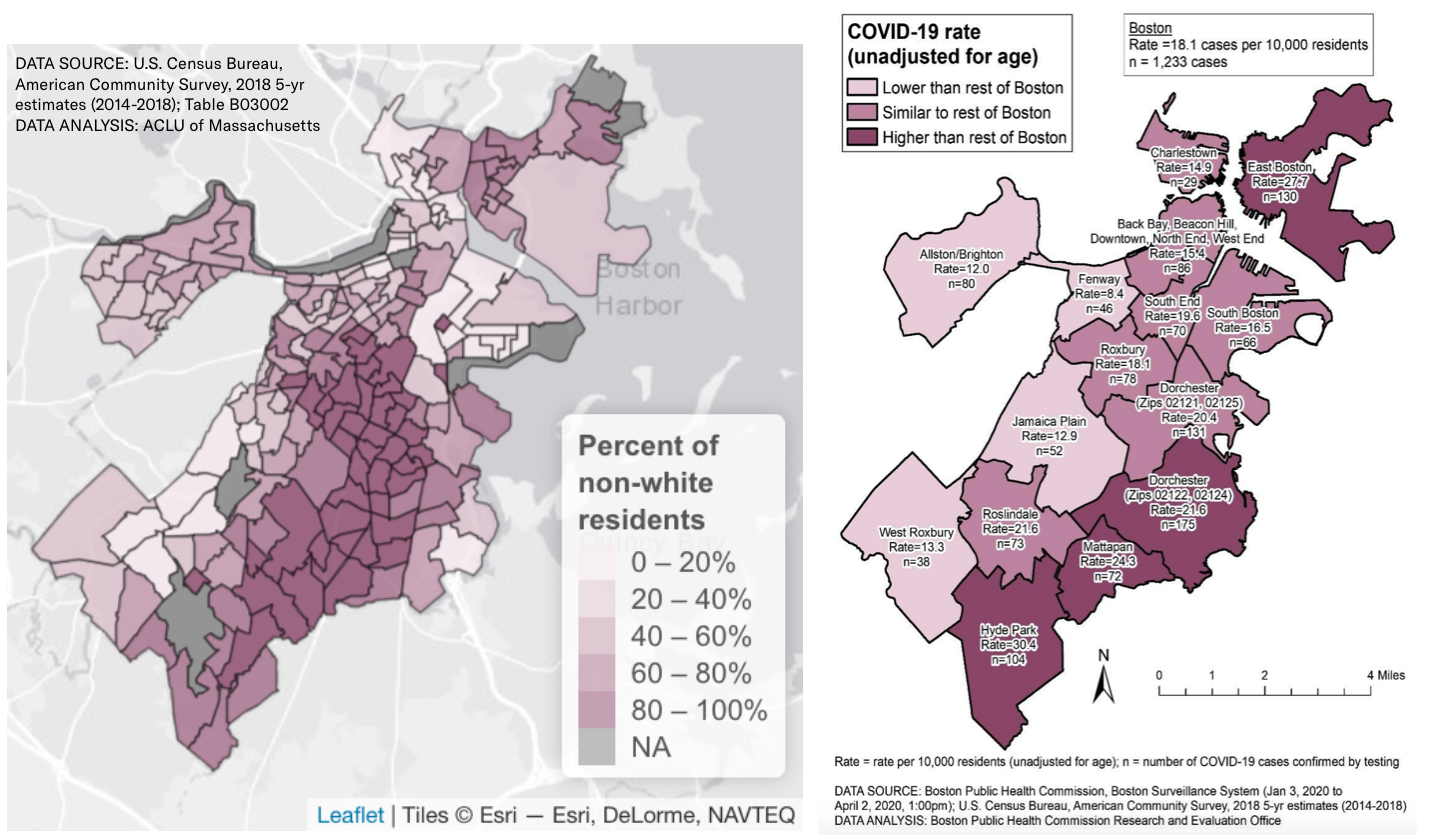

COVID-19 Cases Concentrated in Boston's Black & Brown Neighborhoods

Similarly, the areas with the most COVID-19 cases align with the Boston communities where people of color make up a majority of the population.

Communities like Hyde Park, Mattapan, and Dorchester, where over 50 percent of the population is non-white (including African American, Hispanic or Latinx, Asian, Native American, Multiracial, or any racial category other than "White Alone"), are again the communities with the highest rates of COVID-19.

Latinx-Majority Chelsea Hit Hard

As this crisis evolves, more details are coming into focus that show how already-vulnerable communities are those hardest hit by the virus.

As of April 7th, the city of Chelsea had 315 COVID-19 cases in a population just over 40,000. This translates to a rate of about 79 cases per 10,000 residents – over four times the rate of 18 cases per 10,000 residents in neighboring Boston, as reported by the BPHC.
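The arithmetic behind this comparison can be checked with a few lines of Python. This is an illustrative sketch, not the analysis code used for this post. The function name `cases_per_10k` is hypothetical, and Chelsea's population is rounded to 40,000 because the text gives it only as "just over 40,000".

```python
def cases_per_10k(cases, population):
    """Return the number of cases per 10,000 residents."""
    if population <= 0:
        raise ValueError("population must be positive")
    return cases / population * 10_000


# Case count quoted in the text; the population is a rounded assumption.
chelsea_rate = cases_per_10k(315, 40_000)

# Boston's rate in cases per 10,000 residents, as reported by the BPHC.
boston_rate = 18

ratio = chelsea_rate / boston_rate
print(f"Chelsea: {chelsea_rate:.2f} cases per 10,000 residents")
print(f"Chelsea's rate is {ratio:.1f} times Boston's")
```

Because the true population is slightly above 40,000, the exact rate would be marginally lower than this rounded estimate, but it still rounds to about 79 per 10,000 and remains more than four times Boston's rate.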

On Monday evening, Massachusetts General Hospital's Chief Equity and Inclusion Officer, Dr. Joseph Betancourt, reported that 35-40 percent of the COVID-19 patients being treated at Mass. General were Hispanic or Latinx.

https://twitter.com/simonfrios/status/1247253763112517637?s=20

In an interview with WBUR, Betancourt mentioned that the outsized effect Chelsea is experiencing could be due to a number of factors, including increased rates of co-habitation and high proportions of residents working in jobs "where social distancing is not possible."

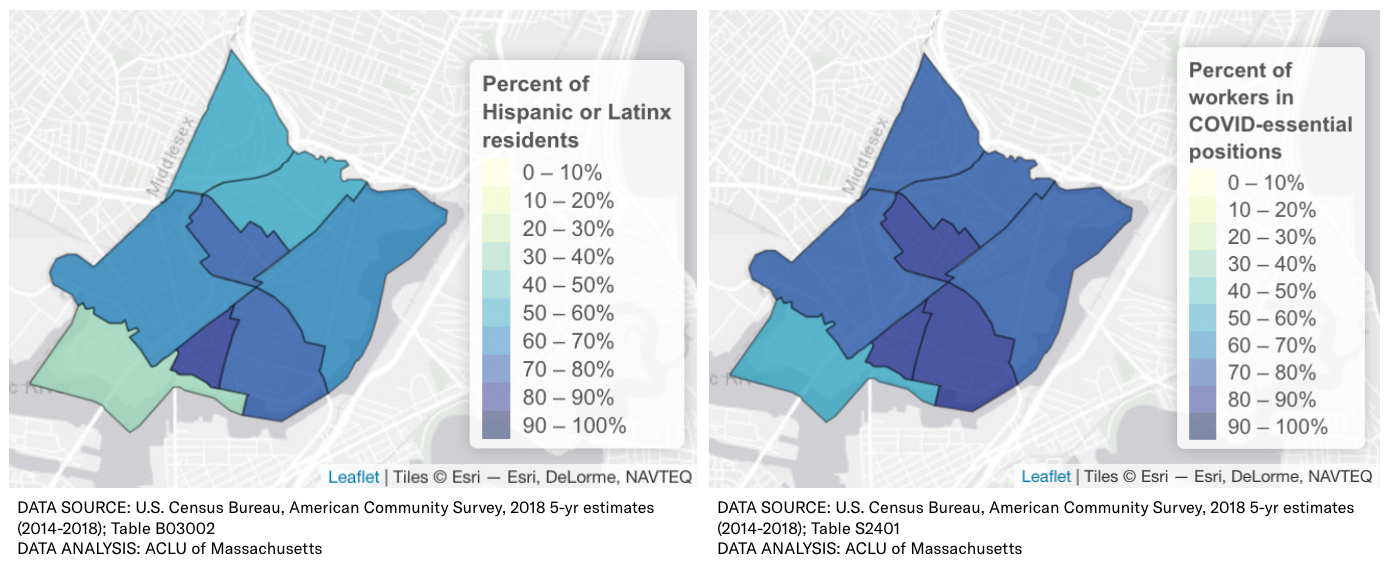

Indeed, our analysis of 2018 ACS data shows that 79.8 percent of all workers in Chelsea are in COVID-essential positions. In some parts of Chelsea, over 80 percent of the employed population works in occupations that are deemed essential during the ongoing crisis.

And even within Chelsea, census tracts with the highest proportion of Hispanic or Latinx residents align exactly with the tracts containing the greatest percentage of workers employed in essential jobs.

As editorial writer Marcela García states in her recent Boston Globe piece about Chelsea, “Not only are these immigrants — mostly Latino, many of them here without legal status — the most economically vulnerable, but a high proportion of them already have limited access to health care and other public support networks. Working from home is a privilege that they simply don’t have.” She describes how the COVID-19 outbreak is acting as a “great revealer,” bringing to light systemic inequalities that have plagued minoritized and working-class communities for centuries.

Ultimately, it is communities like Chelsea, with a very high proportion of both COVID-essential workers and residents of color, that are suffering disproportionately in this pandemic. Ironically, a major cause of their increased hardship is the irreplaceable role they play in supporting the continued functioning of all of society, by working essential service jobs.

This is exactly why the ACLU of Massachusetts and other advocacy organizations are pushing for legislative, executive, and judicial action to protect immigrants and working people. While the federal stimulus package passed by Congress in March is a start, it does not go far enough to establish the comprehensive social and economic protections – or the robust data collection practices – required to support vulnerable communities across Massachusetts today.

Our mayors, governors, and representatives must step up. Last week, the ACLU of Massachusetts called on the state Department of Public Health to center equity in its response to the crisis. We must record the race and ethnicity of those receiving tests and treatment for COVID-19, so as to better understand how the virus affects different communities differently. We must ensure that our first responders—including not just EMTs and other healthcare personnel, but also grocery store employees, delivery workers, and public transit operators—have the personal protective equipment (PPE) they need, and have priority access to testing.

The stakes have seldom been higher to get it right on equity. Our leaders must act now in order to save the lives of those working to protect and support us all.

Interested programmers can find the R code used to create these maps on GitHub.

Edit: A previous version of this post gave the total percentage of essential workers in Chelsea as 76.9 percent. That number was calculated in error and has been updated to 79.8 percent.

Building a Better Future for AI

In recent months, headlines about artificial intelligence (AI) have ranged from concerning to downright dystopian. In Lockport, NY, the public school system rolled out a massive facial recognition system to surveil over 4,000 students – despite recent demographic studies that show the technology frequently misidentifies children. Ongoing investigations into Clearview AI, the facial recognition startup built upon photos that were illicitly scraped from the web, have revealed widespread unsupervised use not only by law enforcement but also by banks, schools, department stores, and even rich investors and friends of the company.

But the recent Social Impact in AI Conference, hosted by the Harvard Center for Research on Computation and Society (CRCS), offered another path forward for the future of artificial intelligence.

In just two days, conference attendees shared their work applying artificial intelligence and machine learning to a shockingly wide range of domains, including homelessness services, wildlife trafficking, agriculture by low-literate farmers, HIV prevention, adaptive interfaces for blind users and users with dementia, tuberculosis medication, climate modeling, social robotics, movement-building on social media, medical imaging, and education. These algorithms center not on surveillance and profit maximization, but on community empowerment and resource optimization.

What’s more, many of these young researchers are centering considerations of bias and equity, (re)structuring their designs and methodologies to minimize harm and maximize social benefit. Many of the researchers voiced concerns about how their work interacts with privacy and technocratic values, not shying away from difficult questions about how to responsibly use personal data collected by often opaque methods.

The conference was designed around a central question, “What does it mean to create social impact with AI research?” Over the course of the event, at least one answer became clear: it means listening.

Indeed, a central takeaway from the conference was the importance of knowing what you don’t know, and of not being afraid to consult others when your expertise falls short. AI-based solutions will only ever solve problems on behalf of the many if they are designed in consultation with affected populations and relevant experts. No responsible discussion of the computational advances of new AI systems is complete without integrated interdisciplinary discussions regarding the social, economic, and political impacts of such systems. In this sense, the conference practiced what it preached – many attendees and speakers came from social work, biology, law, behavioral science, and public service.

The success of CRCS’s conference serves as a reminder that AI, for all its futuristic hype, is fundamentally just a tool. How we use it is determined by us: our cultural priorities and societal constraints. And as a tool, AI deserves better.

Recent advances in artificial intelligence algorithms have too frequently been used to the detriment of our civil liberties and society’s most vulnerable populations—further consolidating power in the hands of the powerful, and further exacerbating existing inequities. But reproducing and consolidating power is not what the world needs. Indeed, the AI that the world needs will not be developed by Silicon Valley technocrats in pursuit of profit, nor by Ivory Tower meritocrats in pursuit of publications. The AI we need must be developed by interdisciplinary coalitions in pursuit of redistributing power and strengthening democracy.

To that end, I offer the following six guiding questions for AI developers, as a roadmap to designing socially responsible systems:

- How might my tool affect the most vulnerable populations?

- Have I consulted with relevant academics, experts, and impacted people?

- How much do I know about the issue I am addressing?

- How am I actively countering the effects and biases of oppressive power structures that are inherently present in my data/tool/application?

- How could my tech be used in a worst-case scenario?

- Should this technology exist?

Answering these questions is undoubtedly hard, but it’s only by engaging with them head-on that we stand any chance of truly changing the world for the better, with AI or with any other technology.